Research Activities

Ongoing research lines address the platform challenges of large-scale, distributed cloud environments:

Data-centric Cloud Architecture

Existing cloud and data center infrastructures follow a traditional compute-centric approach where data is brought to the compute nodes. This paradigm works well for compute-intensive, High Performance Computing applications but not for applications that are data-intensive.

The focus is shifting from computation to data exploration, with growing demand for storing, processing and sharing large data sets. This shift requires data-centric infrastructures that minimize data movement by bringing compute to the data.

This research line addresses the challenges of implementing a data-driven approach to cloud management.
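
A minimal sketch of what "bringing compute to data" can mean for a scheduler, assuming each data set lives on exactly one node and that data movement is measured in gigabytes copied. The names `Task`, `gb_moved` and `place`, as well as the node and data set names, are hypothetical illustrations and not part of any cloud manager's API.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    # Input data sets read by the task: data set name -> size in GB.
    inputs: dict


def gb_moved(task, node, location):
    """Total input size (GB) that must be copied if `task` runs on `node`."""
    return sum(size for ds, size in task.inputs.items() if location[ds] != node)


def place(task, nodes, location):
    """Pick the node that minimizes data movement for `task`."""
    return min(nodes, key=lambda n: gb_moved(task, n, location))


# Hypothetical cluster: where each data set is stored.
location = {"logs": "node-a", "index": "node-b", "users": "node-a"}
nodes = ["node-a", "node-b", "node-c"]
task = Task("join", {"logs": 500, "index": 20, "users": 5})

chosen = place(task, nodes, location)
print(chosen, gb_moved(task, chosen, location))
```

In this example the task is placed on `node-a`, so only the 20 GB `index` data set has to cross the network. A compute-centric scheduler that ignored data location could pick `node-c` and copy all 525 GB.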

Cloud Computing for Edge Analytics

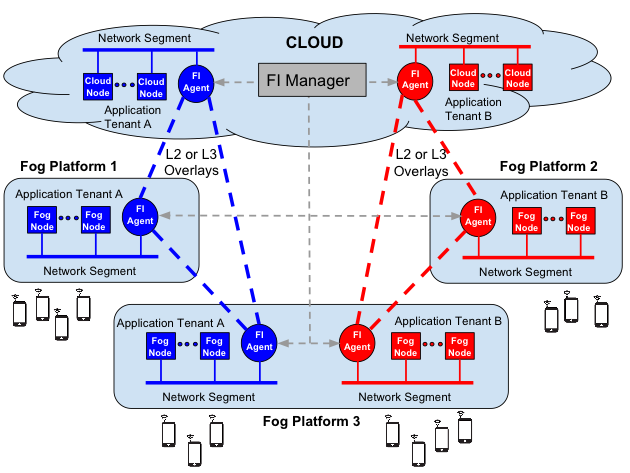

The Internet of Things (IoT) is drastically changing our homes and cities by connecting every kind of device to the Internet. IoT lays the groundwork for environments that perform optimally and react to our needs.

To make this vision a reality, we need to develop, alongside it, a new computing infrastructure that can cope with massive device connectivity and is flexible enough to address the requirements of a diverse set of devices and their associated applications. Reducing and managing communication latency will define the future of applications such as IoT, video streaming, gaming and many mobile applications.

Centralized clouds are appropriate for services with limited data communication, such as the Web, or for batch processing. They are not appropriate for applications that move large amounts of distributed data or that serve interactive users who need low latency and real-time processing. Meeting these latency demands requires bringing resources as close to the IoT devices as physically possible.

This research line is addressing the challenges of building or using distributed clouds to co-locate data processing services across different geographies to provide the required Quality of Service (QoS) and functionality.
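
The latency constraint described above can be sketched as a simple site-selection rule: among the sites with free capacity, pick the one with the lowest round-trip time, and reject the request if no site meets the application's latency budget. The site names, latency figures and the `pick_site` helper are hypothetical and chosen only for illustration.

```python
def pick_site(sites, max_rtt_ms):
    """Return the name of the lowest-latency site that has free capacity
    and meets the latency budget, or None if no site qualifies."""
    candidates = [
        s for s in sites
        if s["free_slots"] > 0 and s["rtt_ms"] <= max_rtt_ms
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s["rtt_ms"])["name"]


# Hypothetical sites as seen from one group of IoT devices.
sites = [
    {"name": "central-cloud", "rtt_ms": 85, "free_slots": 1000},
    {"name": "metro-edge", "rtt_ms": 12, "free_slots": 4},
    {"name": "street-cabinet", "rtt_ms": 3, "free_slots": 0},
]

print(pick_site(sites, max_rtt_ms=20))   # interactive workload
print(pick_site(sites, max_rtt_ms=200))  # latency-tolerant workload
print(pick_site(sites, max_rtt_ms=2))    # budget no site can meet
```

Both the interactive and the latency-tolerant requests land on `metro-edge` here, because the closest site has no free capacity. A real placement policy would also weigh cost and data location, which this sketch leaves out.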

Distributed Cloud Computing

Many experts have suggested that the private cloud will soon die out. However, they overlook the high fragmentation of the data center ecosystem, the security and performance benefits of a local private infrastructure, especially in view of the upcoming growth of big data, and the large number of applications that are not suitable for the public cloud model. Moreover, many private data centers are large enough to achieve the same cost benefits as big clouds.

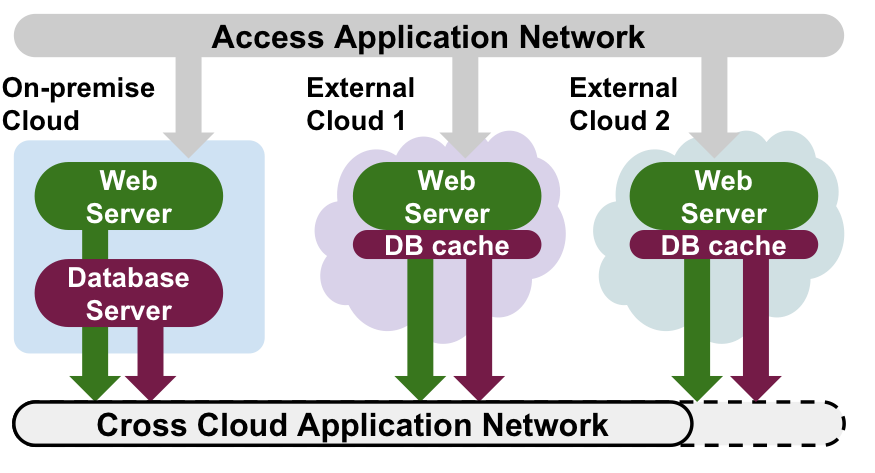

Cloud computing is moving away from large centralized infrastructures toward distributed cloud computing environments that let organizations find the right combination of cloud services to execute their workloads efficiently and meet their future cloud needs.

Distributed cloud computing encompasses hybrid cloud, where a private cloud infrastructure is combined with outsourced resources from one or more external public clouds; federated cloud, where clouds managed by different organizations federate so that users can use any of the connected clouds; and edge cloud, where computation is performed at the edge of the network to reduce latency.

This research line addresses the challenges of combining several clouds to build the dynamic, agile and decentralized environments needed to meet the security, performance or cost needs of future workloads, such as geographically distributed multi-cloud applications, high availability across data centers and location-aware elasticity among different clouds.
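
The hybrid cloud model described above can be illustrated with a deliberately simple placement rule, sometimes called cloud bursting: keep jobs on the private cloud while it has free cores, and send the overflow to a public cloud. The `schedule` function, the job names and the core counts are hypothetical, and the sketch ignores cost, security and data placement, which a real policy would have to consider.

```python
def schedule(jobs, private_cores):
    """Assign each (name, cores) job to 'private' while it fits in the
    remaining private capacity, otherwise to 'public'."""
    placement = {}
    free = private_cores
    for name, cores in jobs:
        if cores <= free:
            placement[name] = "private"
            free -= cores
        else:
            placement[name] = "public"
    return placement


# Hypothetical workload for a private cloud with 16 free cores.
jobs = [("web", 8), ("batch", 16), ("db", 4)]
placement = schedule(jobs, private_cores=16)
print(placement)
```

With 16 private cores, `web` and `db` stay local while `batch` is outsourced, because it no longer fits once `web` has been placed.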

Federated Cloud Networking

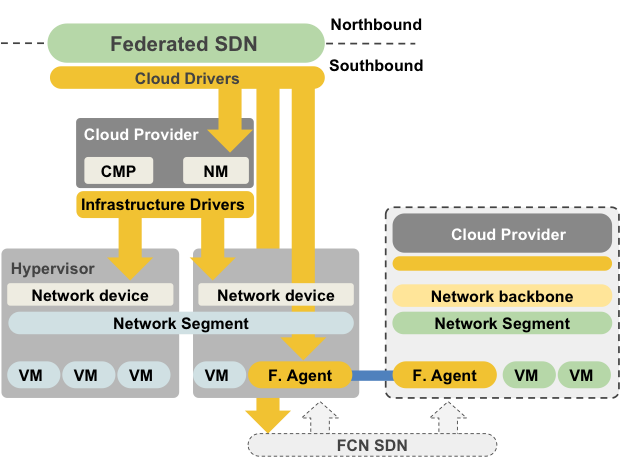

Cloud federation enables cloud providers and data centers to collaborate and share their resources to create a large virtual pool of resources at multiple network locations.

Different types of federation architectures for clouds and data centers have been proposed and implemented (e.g. cloud bursting, cloud brokering or cloud peering), with different levels of resource coupling and interoperation among the cloud resources. These range from loosely coupled federations, typically involving different administrative and legal domains (e.g. a federation of different public clouds), to tightly coupled federations, usually spanning multiple data centers within an organization.

This research line investigates network federation techniques that enable the automatic provisioning and management of cross-site virtual networks for federated cloud infrastructures, to support the deployment of applications and services across different clouds and data centers.

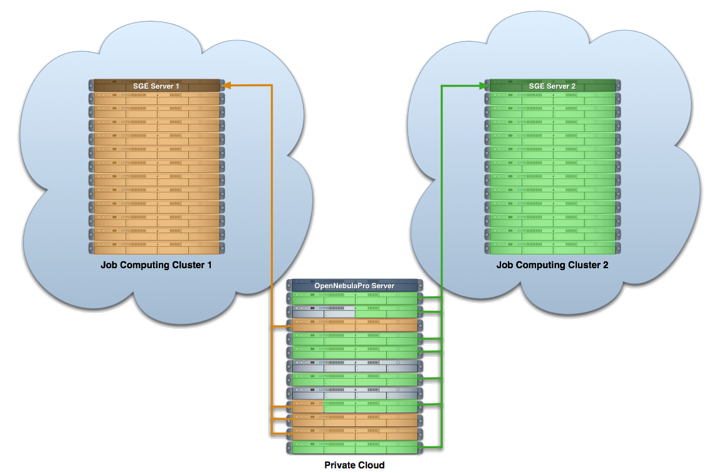

High Performance Computing (HPC) Cloud

Science clouds provide access to flexible and elastic scientific and technical computing to solve complex problems and drive innovation.

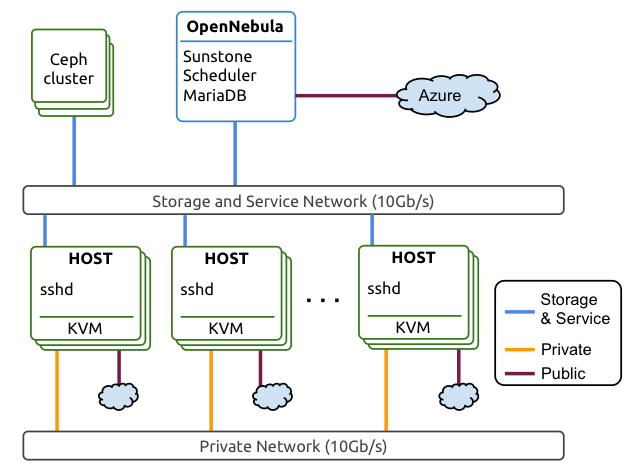

Many supercomputing and research centers are building private clouds for hosting virtualized computational environments, such as batch farms and computing clusters, or for providing users with new “HPC as a service” resource provisioning models.

This research line addresses the challenges of designing cloud architectures that enable the on-demand execution of flexible and elastic cluster and high performance computing services while reducing the cost of building the data center infrastructure.

Past Research Activities

Our past work includes these research activities:

Metascheduling and Efficient Application Execution

Efficient execution of workflows, efficient execution of divisible workloads, advance meta-reservation functionality and state-of-the-art scheduling policies.

Federation of Grid Infrastructures and Utility Computing

Federation of grid architectures, allowing companies and research centers to access their in-house, partner and outsourced computing resources via automated methods using grid standards in a simpler, more flexible and adaptive way.

Real-Time Java

Extensions of the memory management system provided by the Real-Time Java Specification to meet real-time requirements.